New Claude AI Models · Analyzing Cost & Improvements

Published 10-27-2024

Recently, Anthropic, the company behind Claude AI, announced new and improved AI models: an upgraded Claude 3.5 Sonnet and a new Claude 3.5 Haiku.

I maintain an application that uses the Anthropic API with Claude Sonnet 3.5. This article will compare the cost and performance of the new models to gain a practical understanding of their improvements.

We’ll begin by reviewing how Claude AI can be used in an application through the Anthropic API. If you’re already familiar with the Anthropic API and Claude Models, feel free to skip to Analyzing Practical Usage.

How to Use the Anthropic API

To use Claude AI in your application, you’ll need an Anthropic API key and Anthropic’s SDK for your language. API usage is billed per token, and you buy usage credits through the Anthropic Dashboard.

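The API takes a model, a system prompt, the conversation messages, and options such as max_tokens and temperature. It returns a message whose content holds the generated text, which Claude typically formats as Markdown. Here is an example using TypeScript in a server-side function: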

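```typescript
import Anthropic from "@anthropic-ai/sdk";

// Read the API key from a server-side environment variable; never expose it to the browser.
const anthropic = new Anthropic({
  apiKey: process.env["ANTHROPIC_API_KEY"],
});

const msg = await anthropic.messages.create({
  model: "claude-3-5-sonnet-20241022", // pinned model version
  max_tokens: 1500, // upper limit on the number of tokens generated
  temperature: 0, // a low temperature gives more consistent output
  system: "Prompt given to AI each time", // system prompt sent with every request
  messages: [
    {
      role: "user",
      content: [
        {
          type: "text",
          text: "The user's input",
        },
      ],
    },
  ],
});
```

While this may be enough to help you understand how the API works, see these resources to implement the API in your own application: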

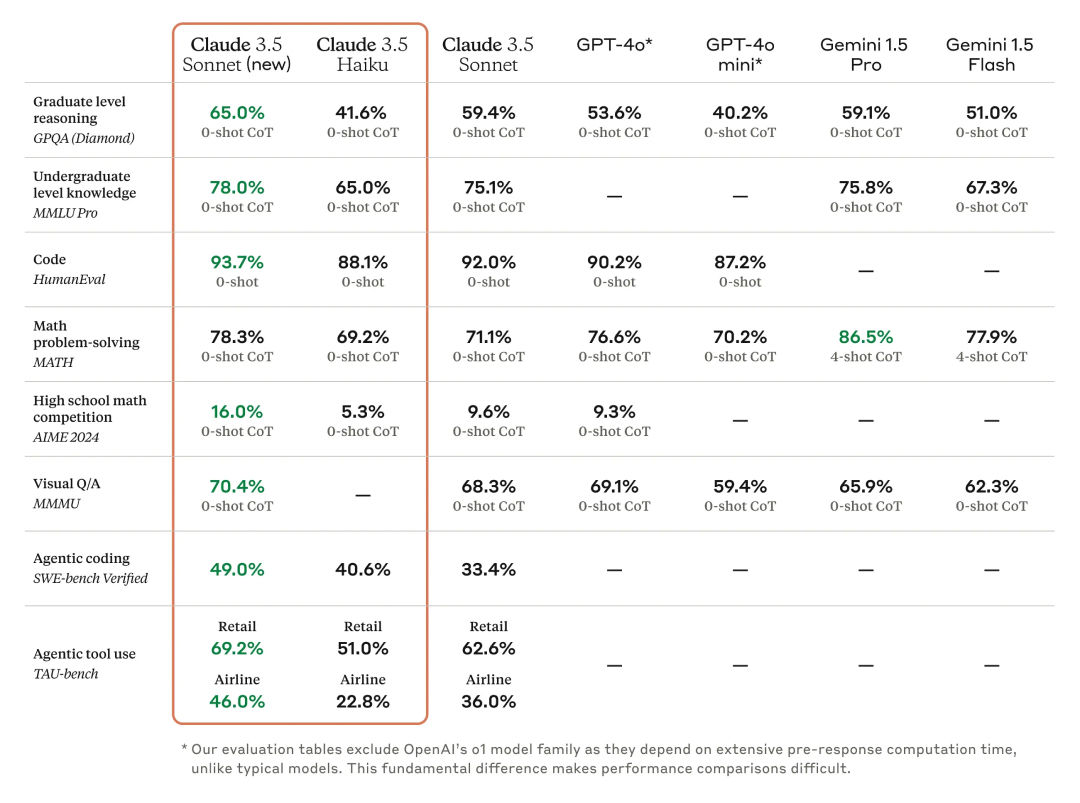
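The example above creates a message but doesn’t read the result. The response’s content field is an array of content blocks, and the generated text lives in the blocks whose type is "text". Below is a minimal sketch of pulling that text out; the interfaces are simplified stand-ins for the SDK’s own types, and the helper names are hypothetical. It also checks stop_reason, which is "max_tokens" when the output was cut off by the max_tokens limit.

```typescript
// Simplified stand-ins for the SDK's response types (only the fields used here).
interface ContentBlock {
  type: string; // "text", "tool_use", ...
  text?: string; // present on text blocks
}

interface MessageLike {
  content: ContentBlock[];
  stop_reason: string | null;
}

// Hypothetical helper: concatenate the text of every "text" block, skipping other block types.
function extractText(msg: MessageLike): string {
  return msg.content
    .filter((block) => block.type === "text" && typeof block.text === "string")
    .map((block) => block.text as string)
    .join("");
}

// Hypothetical helper: true when generation stopped because it hit max_tokens.
function wasTruncated(msg: MessageLike): boolean {
  return msg.stop_reason === "max_tokens";
}
```

With the earlier call, extractText(msg) yields the Markdown to save, and a truncated response can be retried or flagged instead of being stored half-finished.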

Overview of Cost - Speed - Intelligence

Anthropic offers three AI models. The new releases are Claude Sonnet 3.5 (New), available now, and Claude Haiku 3.5, not yet released.

- Claude Sonnet 3.5: by price and intelligence, the overall best option.

- Claude Opus 3.0: an expensive, large, and intelligent model.

- Claude Haiku 3.0: extremely fast and cheap, but lacks capabilities.

New Model Breakdown

Claude 3.5 Sonnet (New)

- $3 per million tokens of input

- $15 per million tokens of output

- Most intelligent model

- “Fast”

Claude 3.5 Haiku

- Not yet released (although benchmarks have been published)

- $0.25 per million tokens of input

- $1.25 per million tokens of output

- Less intelligent

- “Fastest”

New Model Pricing

Pricing for each model has not changed. However, depending on the complexity of the task, the improvements in Claude 3.5 Haiku may make it a more affordable replacement for Sonnet (more on this later).

How fast is fast? How intelligent is the most intelligent? And what does 1 million tokens equate to in practice? We’ll answer these questions with measurements from a real-world application.

Analyzing Practical Usage

Without trying to make this sound like one big ad, I’ll keep this very short. My application is a note-taking app that can generate a group of Markdown notes using the Anthropic API.

The System Prompt

This is a condensed version of my system prompt for Claude Sonnet 3.5:

Given a topic, generate one notebook of study material as 3-5 separate notes formatted with Markdown.

- Highlight: Key words as fill in blanks

- Explain: Provide deeper context and connections

- Add Learning Elements: Key terms, code, and diagrams (if applicable)

- Maximum length: Do not exceed 225 words per note
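One way to keep a rule-based prompt like this maintainable is to store each rule as data and assemble the system string from it, so a rule can be edited or reordered without rewriting the whole prompt. This is a hypothetical helper, not the app’s actual code; the rule text is copied from the condensed prompt above.

```typescript
// Task statement and rules from the condensed system prompt.
const TASK =
  "Given a topic, generate one notebook of study material as 3-5 separate notes formatted with Markdown.";

// Each rule is a [name, instruction] pair.
const RULES: ReadonlyArray<readonly [string, string]> = [
  ["Highlight", "Key words as fill in blanks"],
  ["Explain", "Provide deeper context and connections"],
  ["Add Learning Elements", "Key terms, code, and diagrams (if applicable)"],
  ["Maximum length", "Do not exceed 225 words per note"],
];

// Hypothetical helper: task line, a blank line, then one "- Name: instruction" bullet per rule.
function buildSystemPrompt(task: string, rules: ReadonlyArray<readonly [string, string]>): string {
  const bullets = rules.map(([name, instruction]) => `- ${name}: ${instruction}`);
  return [task, "", ...bullets].join("\n");
}

const systemPrompt = buildSystemPrompt(TASK, RULES);
```

The resulting string would be passed as the system parameter of messages.create in place of the placeholder text.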

The Results

Generating one notebook (five notes):

- Uses about 1500 tokens

- Costs about $.02 (2 cents)

- Runs for about 30 seconds

- Has very high accuracy (caveat: links are often incorrect or broken)

In other words, $15 (the price of 1 million output tokens) covers about 665 notebooks (3325 notes), which would take about 5 hours and 30 minutes of generation to use up.
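The back-of-the-envelope math above can be written out as a small function. This sketch assumes, as the estimate does, that all ~1,500 tokens per notebook are billed at the $15-per-million output rate and that notebooks are generated one after another; the function name is hypothetical. Dividing 1,000,000 by 1,500 gives 666 whole notebooks, in line with the rough figure above.

```typescript
// Figures measured above for one notebook generated with Claude 3.5 Sonnet.
// Assumption: all ~1,500 tokens are billed at the output rate.
const TOKENS_PER_NOTEBOOK = 1_500;
const NOTES_PER_NOTEBOOK = 5;
const SECONDS_PER_NOTEBOOK = 30;
const OUTPUT_USD_PER_MILLION_TOKENS = 15;

interface Budget {
  notebooks: number; // whole notebooks the token budget covers
  notes: number;
  costUSD: number;
  hours: number; // total generation time if notebooks run sequentially
}

// Hypothetical helper: what a given number of tokens buys.
function budgetFor(tokens: number): Budget {
  const notebooks = Math.floor(tokens / TOKENS_PER_NOTEBOOK);
  return {
    notebooks,
    notes: notebooks * NOTES_PER_NOTEBOOK,
    costUSD: (tokens / 1_000_000) * OUTPUT_USD_PER_MILLION_TOKENS,
    hours: (notebooks * SECONDS_PER_NOTEBOOK) / 3_600,
  };
}

// notebooks: 666, notes: 3330, costUSD: 15, hours: 5.55
const perMillion = budgetFor(1_000_000);
```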

Analyzing Improvements

When AI companies release benchmarks of new AI models, those percentage improvements are often determined by standardized tests or specific challenge datasets. Instead, we’ll take a more subjective approach and compare the results of the feature mentioned in the usage analysis.

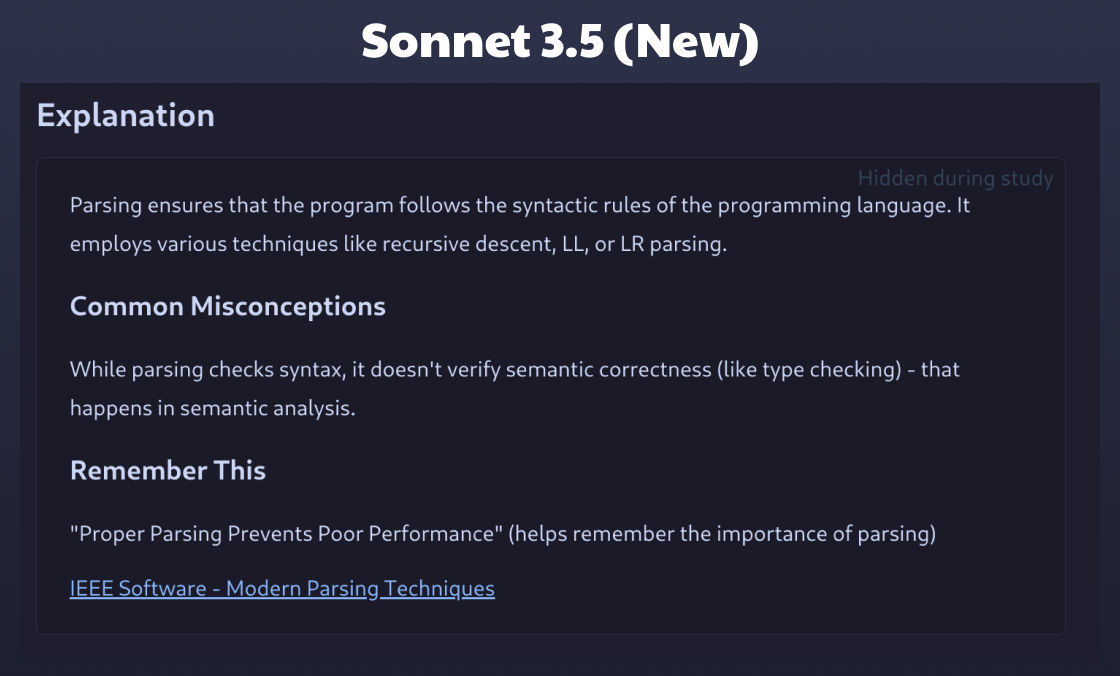

Sonnet 3.5 (New)

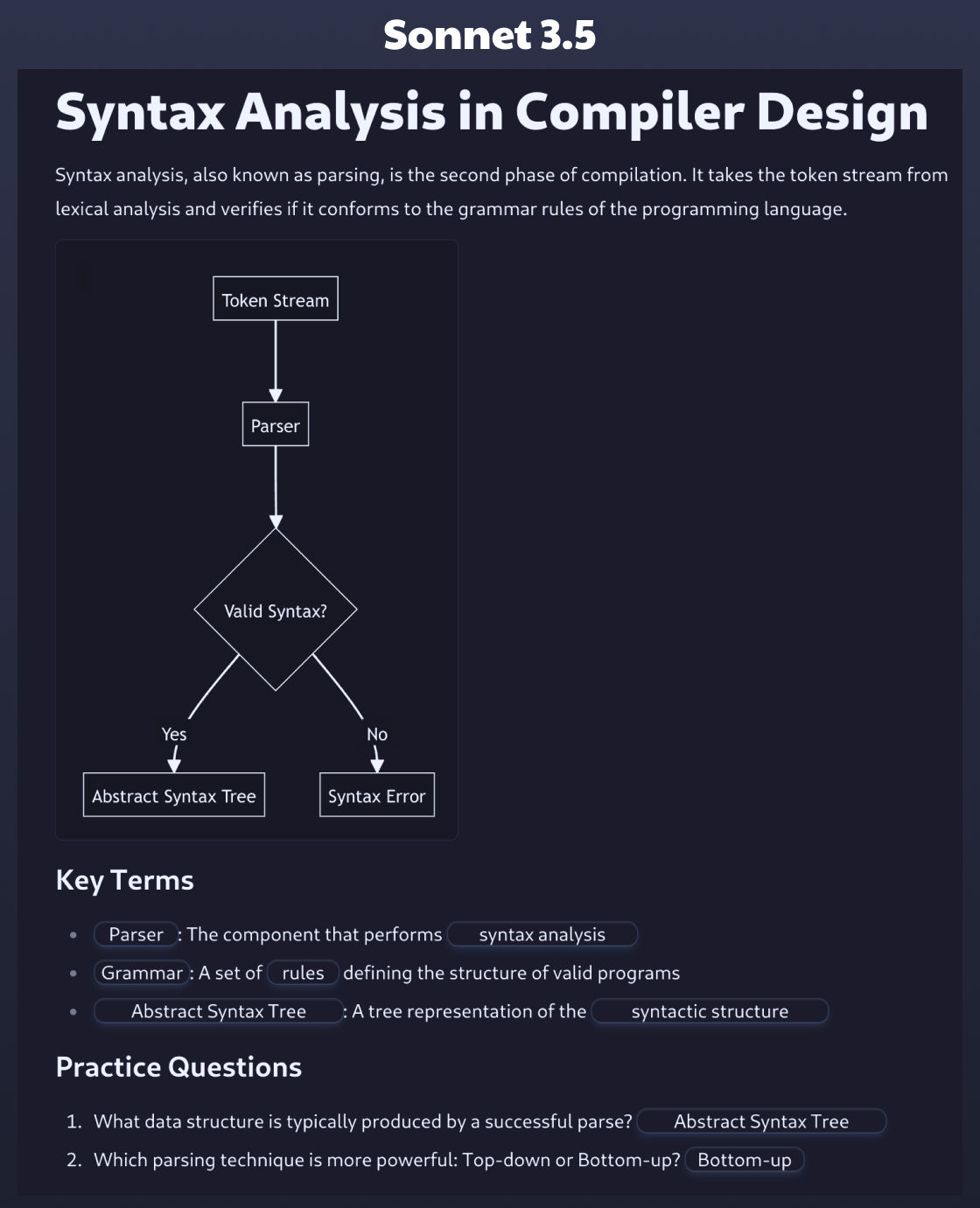

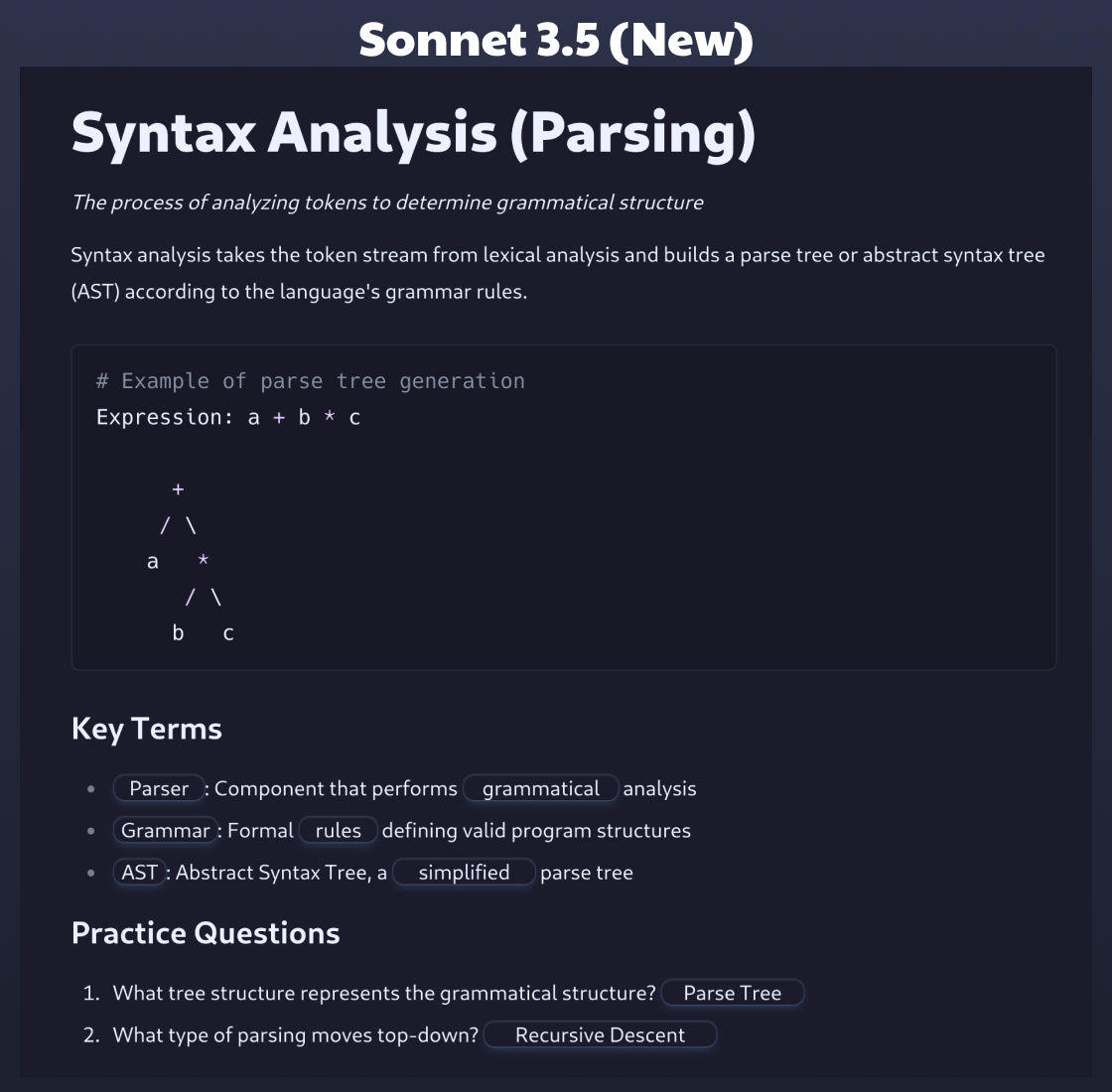

I ran comparisons on numerous topics in academia and computer science. To keep this article brief, we’ll compare one set of generated notes on Compiler Design Techniques.

The two models handle this note slightly differently. The old model builds a flow-chart of how syntax analysis works. The newer model gives an example of a parse tree. Outside of that, they provide a lot of the same information with a few important differences.

Sonnet 3.5 (New) sees the big picture. With no additional prompting, it adds sub-headings to each note that explain its place in the overall subject. It grasps why a topic matters, not just what the topic is. The old model rarely does this.

Sonnet 3.5 (New) can follow many rules consistently. Fill-in-the-blanks that span multiple words are often very difficult to guess. Where the old model might group three words into one blank (or mush two words together), the new model tends to use one or two words clearly.

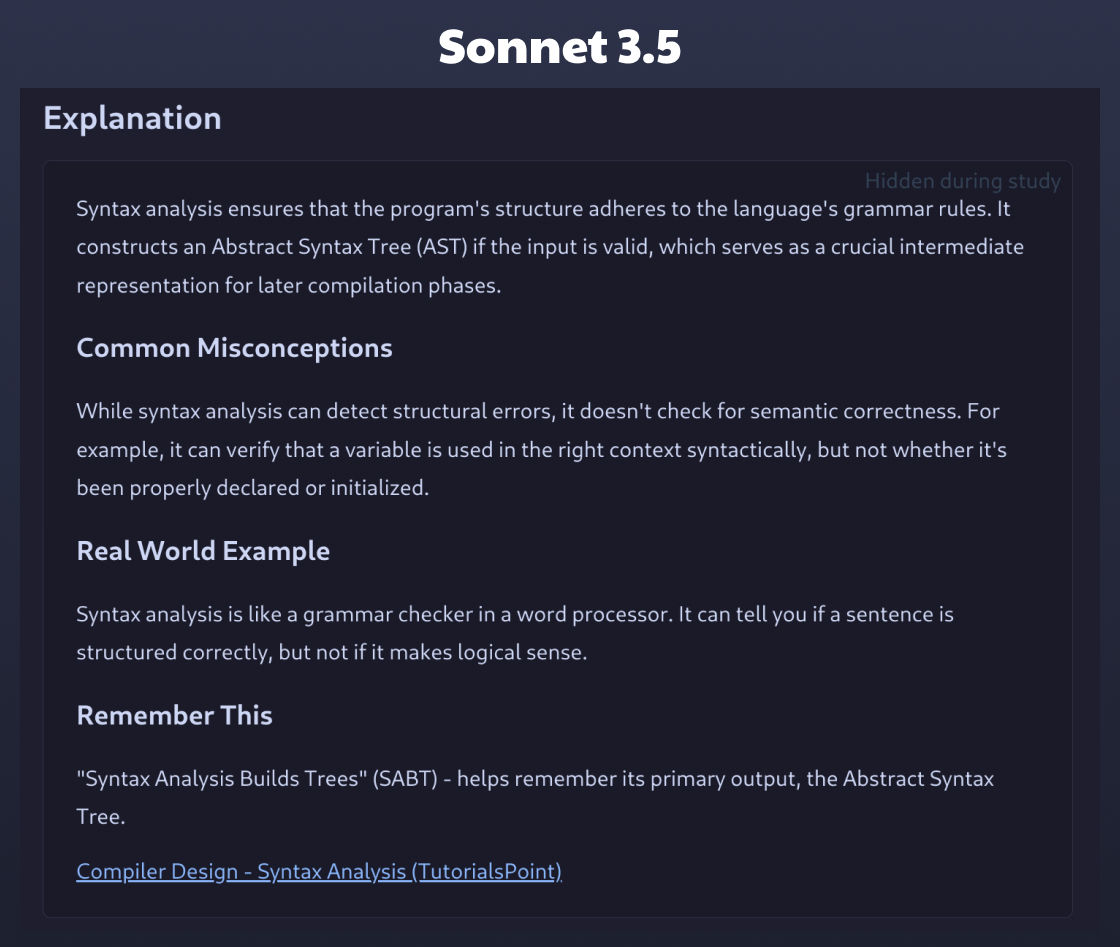

Sonnet 3.5 (New) handles conditional rules better. Learning elements (mnemonics, real-world examples, and common misconceptions) should only be applied when they fit. The new model does a better job of mixing and matching one to three of them, while the old model often applies all three regardless of length or quality.

Sonnet 3.5 (New) breaks fewer rules. The explanation given by the old model may provide more context, but it breaks an explicit rule about maximum length to do so. The new model stays brief and omits redundant information to stay within the rules.

New Model Drawbacks

Relative to the old model, I noticed Sonnet 3.5 (New) required updates to my system prompt.

Without additional explicit structure, it would at times generate notes on unrelated topics, or omit text crucial to parsing.

For reasons like this, the Anthropic team suggests hard-coding the model version in your code, for example claude-3-5-sonnet-20241022.
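Because the new model sometimes drifted off-topic or omitted text needed for parsing, it can help to validate each response before saving it. Below is a minimal sketch, assuming a hypothetical output contract in which every note starts with a level-1 Markdown heading ("# "); the article doesn’t describe the app’s real format, so the split would need to match yours. It checks the 3-5 note count and the 225-word limit from the system prompt and returns a list of problems so the caller can retry or reject.

```typescript
// Limits taken from the system prompt.
const MIN_NOTES = 3;
const MAX_NOTES = 5;
const MAX_WORDS_PER_NOTE = 225;

// Hypothetical contract: a new note begins at every line starting with "# ".
function splitNotes(markdown: string): string[] {
  const notes: string[] = [];
  let current: string[] = [];
  const flush = () => {
    const text = current.join("\n").trim();
    if (text !== "") notes.push(text); // skip empty chunks such as leading blank lines
    current = [];
  };
  for (const line of markdown.split("\n")) {
    if (line.startsWith("# ")) flush();
    current.push(line);
  }
  flush();
  return notes;
}

// Rough word count: whitespace-separated tokens, Markdown symbols included.
function countWords(text: string): number {
  const words = text.trim().split(/\s+/);
  return words[0] === "" ? 0 : words.length;
}

// Returns human-readable problems; an empty array means the response passed.
function validateNotebook(markdown: string): string[] {
  const problems: string[] = [];
  const notes = splitNotes(markdown);
  if (notes.length < MIN_NOTES || notes.length > MAX_NOTES) {
    problems.push(`expected ${MIN_NOTES}-${MAX_NOTES} notes, got ${notes.length}`);
  }
  notes.forEach((note, i) => {
    // Text before the first heading (e.g. "Sure! Here are your notes:") is flagged here.
    if (!note.startsWith("# ")) problems.push(`note ${i + 1} does not start with a heading`);
    const words = countWords(note);
    if (words > MAX_WORDS_PER_NOTE) problems.push(`note ${i + 1} has ${words} words`);
  });
  return problems;
}
```

Returning a list rather than throwing on the first failure makes it easy to log every broken rule at once, which is useful when deciding which part of the system prompt needs more explicit structure.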

Haiku 3.5

The current Haiku 3.0 model cannot perform this task well. Important rules, such as “No more than 2 fill in the blanks per line”, “Add code examples, diagrams, or formulas”, and “Wrap the explanation between a set of dashes”, seemingly never work.

In other words, using Haiku 3.0 wasn’t a viable option for any moderately complex task. This is what makes Haiku 3.5 so exciting. Developers will be able to weigh the trade-offs and implement complex features that are faster and significantly cheaper.

The Haiku 3.5 model is not yet released (as of October 26th). Based on its benchmarks, its performance is much closer to that of the original Claude 3.5 Sonnet.

“Claude 3.5 Haiku is particularly strong on coding tasks. For example, it scores 40.6% on SWE-bench Verified, outperforming many agents using publicly available state-of-the-art models—including the original Claude 3.5 Sonnet and GPT-4o.”

At the announced prices, generating one notebook (five notes) may cost only around $0.002, a fraction of a cent. That makes Haiku 3.5 about 12x cheaper than Sonnet ($1.25 vs. $15 per million output tokens), and it would likely generate notes faster as well.
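The per-notebook comparison can be checked with a small cost function. This sketch uses the per-million-token prices listed earlier in the article (Haiku 3.5’s were not final when this was written) and assumes, as above, that a notebook is about 1,500 tokens, all counted as output; the names are hypothetical.

```typescript
// Per-million-token prices (USD) as listed earlier in this article.
interface Pricing {
  inputPerMillion: number;
  outputPerMillion: number;
}

const SONNET_3_5: Pricing = { inputPerMillion: 3, outputPerMillion: 15 };
const HAIKU_3_5: Pricing = { inputPerMillion: 0.25, outputPerMillion: 1.25 };

// Cost of one request given its input and output token counts.
function requestCostUSD(pricing: Pricing, inputTokens: number, outputTokens: number): number {
  return (
    (inputTokens * pricing.inputPerMillion + outputTokens * pricing.outputPerMillion) /
    1_000_000
  );
}

// Assumption: one notebook is ~1,500 tokens, all counted as output.
const sonnetNotebook = requestCostUSD(SONNET_3_5, 0, 1_500); // ≈ $0.0225
const haikuNotebook = requestCostUSD(HAIKU_3_5, 0, 1_500); // ≈ $0.001875
console.log((sonnetNotebook / haikuNotebook).toFixed(1)); // prints 12.0
```

Because both the input and the output prices differ by the same factor of 12 ($3 vs. $0.25 and $15 vs. $1.25), the ratio holds no matter how a request’s tokens split between input and output.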

Conclusion

Overall, I think Claude 3.5 Sonnet (New) is a noticeable improvement, and I’m very excited to test Haiku 3.5 later this month. I’d like to stop requiring a paid subscription to generate notes in my app, but offering note generation for free is currently too expensive to be feasible.

I’ve purposely avoided linking to my application throughout the post to maintain the integrity of the writing. I’m trying to build an ecosystem of learning tools; below, you can check out some of my work.

- Flotes: Markdown note-taking built for learning.

- Tomatillo Timer: Highly configurable study timer that syncs to music.

- Better Commits: An open source CLI for making conventional commits.

- Discord Channel: Join other tech enthusiasts, students, and engineers.

All of these blog posts and projects are maintained outside of my work at Cisco Systems as a Senior Software Engineer. 100% of the writing, development, and funding are done by myself. I appreciate any shares or shout-outs, thanks for reading! :)